Presented at The Developing Group 5 Apr 2008

“The only thing I know is that I do not know” – Socrates

The following is based on ideas from The Black Swan: The Impact of the Highly Improbable by Nassim Nicholas Taleb (Hardback published by Random House (US) and Allen Lane (UK), 2007. Paperback published by Penguin, 2008)

Before I add any comments, let’s let the man speak for himself. The following has been extracted from The Prologue of The Black Swan. It is a brilliant summary of the main themes in the book, which I urge you to read in full. After that I summarise Taleb’s ideas and relate them to the rationale of the Developing Group.

The next Developing Group will focus on ‘positive’ Black Swans, under the title Maximising Serendipity: The art of recognising and fostering potential.

In Taleb's own words

(pp. xvii-xxviii)

On the plumage of birds

Before the discovery of Australia, people in the Old World were convinced that all swans were white, an unassailable belief as it seemed completely confirmed by empirical evidence. The sighting of the first black swan might have been an interesting surprise for a few ornithologists, but that is not where the significance of the story lies. It illustrates a severe limitation to our learning from observations or experience and the fragility of our knowledge. One single observation can invalidate a general statement derived from millennia of confirmatory sightings of millions of white swans. All you need is one single black bird.

A Black Swan is an event with the following three attributes:

First, it is an outlier, as it lies outside the realm of regular expectations, because nothing in the past can convincingly point to its possibility. Second, it carries an extreme impact. Third, in spite of its outlier status, human nature makes us concoct explanations for its occurrence after the fact, making it [seem] explainable and predictable.

I stop and summarize the triplet: rarity, extreme impact, and retrospective (though not prospective) predictability. A small number of Black Swans explain almost everything in our world, from the success of ideas and religions, to the dynamics of historical events, to elements of our own personal lives. Ever since we left the Pleistocene, some ten millennia ago, the effect of these Black Swans has been increasing. It started accelerating during the industrial revolution, as the world started getting more complicated, while ordinary events, the ones we study and discuss and try to predict from reading the newspapers, have become increasingly inconsequential.

Just imagine how little your understanding of the world on the eve of the events of 1914 would have helped you guess what was to happen next. How about the rise of Hitler and the subsequent war? How about the precipitous decline of the Soviet bloc? How about the rise of Islamic fundamentalism? How about the spread of the Internet? How about the market crash of 1987 (and the more unexpected recovery)? Fads, epidemics, fashion, ideas, the emergence of art genres and schools: all follow these Black Swan dynamics. Literally, just about everything of significance around you might qualify.

It is easy to see that life is the cumulative effect of a handful of significant shocks. It is not so hard to identify the role of Black Swans, from your armchair (or bar stool). Go through the following exercise. Look into your own existence. Count the significant events, the technological changes, and the innovations that have taken place in our environment since you were born and compare them to what was expected before their advent. How many of them came on schedule?

Experts and “empty suits”

Our inability to predict in environments subjected to the Black Swan, coupled with a general lack of awareness of this state of affairs, means that certain professionals, while believing they are experts, are in fact not. Based on their empirical record, they do not know more about their subject matter than the general population, but they are much better at narrating, or, worse, at smoking you with mathematical models. They are also more likely to wear a tie.

Black Swans being unpredictable, we need to adjust to their existence (rather than naively try to predict them). There are so many things we can do if we focus on anti-knowledge, or what we do not know. You can set yourself up to collect serendipitous Black Swans (of the positive kind) by maximising your exposure to them. Indeed, in some domains — such as scientific discovery and venture capital investments — there is a disproportionate payoff from the unknown, since you typically have little to lose and plenty to gain from a rare event. Contrary to social-science wisdom, almost no discovery, no technologies of note, came from design and planning — they were just Black Swans. The strategy is, then, to tinker as much as possible and try to collect as many Black Swan opportunities as you can.

What you do not know

Black Swan logic makes what you don’t know far more relevant than what you do know.

The combination of low predictability and large impact makes the Black Swan a great puzzle; but that is not yet the core concern of this book. The central idea of this book concerns our blindness with respect to randomness, particularly large deviations, in spite of the obvious evidence of their huge influence.

What I call Platonicity is what makes us think that we understand more than we actually do. I am not saying that Platonic forms don’t exist. Models and constructions, these intellectual maps of reality, are not always wrong. The difficulty is that a) you do not know beforehand (only after the fact) where the map will be wrong, and b) the mistakes can lead to severe consequences. These models are like potentially helpful medicines that carry random but very severe side effects.

Learning to learn

We do not spontaneously learn that we don’t learn that we don’t learn. The problem lies in the structure of our minds: we don’t learn rules, just facts, and only facts. Meta-rules (such as the rule that we have a tendency to not learn rules) we don’t seem to be good at getting.

Life is very unusual

Almost everything in social life is produced by rare but consequential shocks and jumps; all the while almost everything studied about social life focuses on the “normal”, particularly with “bell curve” methods of inference that tell you close to nothing. The bell curve ignores large deviations, cannot handle them, yet makes us confident that we have tamed uncertainty.

The bottom line

The beast in this book is not just the bell curve and self-deceiving statisticians, nor the Platonified scholar who needs theories to fool himself with. It is the drive to “focus” on what makes sense to us. Living on our planet, today, requires a lot more imagination than we are made to have.

To summarize: in this (personal) essay, I claim that our world is dominated by the extreme, the unknown, and the very improbable — and all the while we spend our time engaged in small talk, focussing on the known, and the repeated. This implies the need to use the extreme event as a starting point and not treat it as an exception to be pushed under the rug. I also make the bolder claim that in spite of our progress and growth, the future will be increasingly less predictable, while both human nature and social “science” seem to conspire to hide this idea from us.

Examples of Black Swan events:

“In the last 50 years, the ten most extreme days in the US stock market represent half of all returns.” (p. 275, Taleb)

“In October 1987, the Dow Jones fell by almost a third in less than a week, with just a single day showing a collapse of over 20 percent.” (p. 173, Ormerod)

In just 15 years Enron grew from nowhere to become America’s seventh largest company. Fortune magazine named Enron ‘America’s Most Innovative Company’ for six consecutive years from 1996 to 2001. By the end of that year Enron had declared itself bankrupt, leaving behind $31.8bn (£18bn) of debts and worthless shares, and 21,000 workers around the world lost their jobs. (news.bbc.co.uk/1/hi/business/3398913.stm)

September 11, 2001

November 9, 1989: the fall of the Berlin Wall (and the disintegration of the Soviet Bloc)

Indian Ocean tsunami of December 2004.

Today’s sub-prime credit crisis.

Penicillin came from a mould that Alexander Fleming happened to notice.

When the laser was first invented it had no known application.

According to Taleb, as a rule ‘positive’ Black Swans start slowly and grow over time, while ‘negative’ Black Swans make their impact felt almost instantly.

Three Types of Swan

Taleb distinguishes between three types of unexpected event:

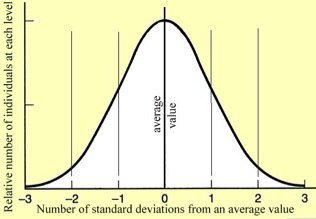

White Swans result from the ‘Normal’ randomness of a Gaussian Distribution (Bell Curve) circumstance, called Mediocristan by Taleb. This is the land of the known unknown. Its main features are:

- Averages have an empirical reality

- The likelihood of an extreme event reduces at a faster and faster rate as you move away from the average

- This effectively puts an upper and lower limit on the scale of an event (people can only be so tall or so short)

- Outliers are therefore extremely unlikely

- No single event significantly affects the aggregate (you can’t get fat in one day)

Because probabilities are known, the chance or risk of an unexpected event can be calculated precisely, e.g. weight, height (most things in nature that grow), coin tossing, IQ scores.

Grey Swans result from circumstances where Power Law (Fractal or Fat Tailed) randomness occurs, called Extremistan by Taleb. This is the province of the somewhat known unknown. Its main features are:

- Although the probabilities of events follow a pattern, this does not give precise predictability (think of forecasting the weather)

- Averages are meaningless because the range of possible events is so large

- The likelihood of an extreme event reduces more and more slowly as you move away from the most frequent event (that’s why they are said to have ‘fat’ or ‘long tails’)

- This means there is no upper limit (Bill Gates could be worth twice, three, four … times as much)

- Outliers are therefore much more likely than you might think

- One single event can change the whole picture (if Bill Gates is in your sample of wealth, no one else matters)

e.g. magnitude of earthquakes, blockbuster books and movies, stock market crashes, distribution of wealth, extinctions (species and companies), population of cities, frequency of words in a text, epidemics, casualties in wars, pages on the WWW.
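To make the contrast between the two lists concrete, here is a small Python sketch (our illustration, not Taleb's) comparing how quickly the chance of an ever larger event shrinks. It assumes a standard normal variable for Mediocristan and a Pareto (power-law) variable with an arbitrarily chosen tail exponent `alpha = 1.5` for Extremistan; the function names are hypothetical.

```python
import math

def gaussian_tail(k: float) -> float:
    """P(Z > k) for a standard normal variable Z (Mediocristan)."""
    return 0.5 * math.erfc(k / math.sqrt(2))

def pareto_tail(x: float, alpha: float = 1.5, x_min: float = 1.0) -> float:
    """P(X > x) for a Pareto variable X (Extremistan); alpha is an assumed tail exponent."""
    return (x_min / x) ** alpha if x >= x_min else 1.0

# How much rarer does an event become each time its size doubles?
for k in (1, 2, 4, 8):
    g_ratio = gaussian_tail(2 * k) / gaussian_tail(k)
    p_ratio = pareto_tail(2 * k) / pareto_tail(k)
    print(f"{k:>2} -> {2 * k:>2}: Gaussian x{g_ratio:.1e}, power law x{p_ratio:.3f}")
```

Each doubling of event size cuts the power-law probability by the same factor (about 0.35 under these assumptions), while the Gaussian factor collapses towards zero. That is the difference between a thin tail and a fat one.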

Black Swans result from all those forms of nonlinear randomness that we do not know about (and possibly never will). We are in the realm of the unknown unknown. We have no means of calculating the likelihood of an event. Everything about Extremistan applies without any probability distributions to guide us. A Black Swan:

- Is an outlier that lies outside the realm of regular expectations (because nothing in the past can convincingly point to its possibility)

- Carries extreme impact (so much so that at the time, if it is a ‘negative’ Black Swan, almost nothing else matters)

- Gets explained after the event, making it look as if we could have predicted it (if only …)

This fools us into thinking someone (an ‘expert’) might be able to predict the next Black Swan, which in itself increases the chances of another one catching us unawares in the future.

What has this got to do with Clean?

Concepts within the fields of emergence, networks, and self-organising systems related to Black Swan Logic:

- Nonlinearity, Fat tails, Power Laws, Fractals

- Punctuated Equilibrium

- Thresholds

- Tipping Points

- Contagions

Related topics:

If you have been attending the Developing Group for a while (or reading the notes on this web site), you’ll have recognised that we’ve covered many of these ideas before. Previous Developing Group days that have touched on Black Swan thinking are:

2002 Feb 16 What is Emergence?

2004 Feb 7 Self-Deception, Self-Delusion and Self-Denial

2004 Jun 5 Thinking Networks – Part 1 (& Part 2 – Jun 3, 2006)

2005 Jun 4 Feedback Loops

2006 Apr 1 Becausation

Topics within the work of David Grove and its derivatives related to Black Swan thinking:

- Not Knowing

- Defining Moments

- Unpredictability of change

- Maturing Changes

- Bottom-up modelling

- Recursion and feedback loops

- Noticing the idiosyncratic

- “Looking for what’s not there, that has to be there, for what is there to exist” (Grove)

Expressions that may indicate a personal Black Swan event:

It came out of the blue / left field.

I was totally unprepared for it.

It hit me like a thunderbolt.

It was off the scale.

Nothing like that had ever happened to me before.

It was a one in a million chance.

I never thought it could happen.

Things were going along so nicely.

I just happened to be in the right place at the right time.

I hadn’t bargained for that.

It was an act of God.

I was astonished / shocked.

No one anticipated it.

It arrived unannounced.

How was I supposed to know?

I must be cursed.

Not again!

I was taken aback by the sheer size of it.

I just wasn’t expecting that.

I didn’t think I was taking a risk.

Totally unforeseen.

Who would have thought?

No one warned me this might happen.

I felt so safe.

How lucky can you get?

It never occurred to us.

My whole world turned upside down.

I never thought in my wildest dreams this would come up.

I see the ideas in Taleb’s book arranging themselves into four levels:

I. The nature of uncertainty, unpredictability and randomness.

II. How we get fooled by I

III. Why we don’t seem to learn from II

IV. And how we can.

[Note the parallels with the four levels of Self-deception, delusion and denial.]

LEVEL I

The nature of uncertainty, unpredictability and randomness

Randomness:

“Randomness is the result of incomplete information at some layer. It is functionally indistinguishable from ‘true’ or ‘physical’ randomness. Simply, what I cannot guess is random because my knowledge about causes is incomplete, not necessarily because the process has truly unpredictable properties.” (pp. 308-9, Taleb)

The Bell Curve (‘Normal’ or Gaussian distribution):

“We can make good use of the Gaussian approach in variables for which there is a rational reason for the largest not to be too far away from the average: if there is gravity pulling numbers down, or there are physical limitations preventing very large observations, [or] there are strong forces of equilibrium bringing things back rather rapidly after conditions diverge from equilibrium.

Note that I am not telling you that Mediocristan does not allow for some extremes. But it tells you that they are so rare that they do not play a significant role in the total. The effect of such extremes is pitifully small and decreases as your population gets larger.

In Mediocristan, as your sample size increases, the observed average will present itself with less and less dispersion — uncertainty in Mediocristan vanishes. This is the ‘law of large numbers’.” (pp. 236-8, Taleb)
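Taleb’s point that in Mediocristan extremes do not play a significant role in the total, while in Extremistan one observation can dominate, can be checked with a quick simulation. This is a sketch under assumed models: heights drawn from a normal distribution (mean 170 cm, standard deviation 10 cm) and wealth drawn from a Pareto distribution with tail exponent 1.1, both chosen purely for illustration; `largest_share` is a hypothetical helper.

```python
import random

def largest_share(samples: list[float]) -> float:
    """Fraction of the total contributed by the single largest observation."""
    return max(samples) / sum(samples)

random.seed(2008)  # fixed seed so the run is repeatable
n = 10_000

# Assumed illustrative models, not fitted to real data:
heights = [random.gauss(170, 10) for _ in range(n)]      # Mediocristan
wealth = [random.paretovariate(1.1) for _ in range(n)]   # Extremistan

print(f"Tallest person's share of total height: {largest_share(heights):.5f}")
print(f"Richest person's share of total wealth: {largest_share(wealth):.3f}")
```

With these assumptions the tallest person contributes a vanishingly small fraction of total height, whereas the richest person’s share of total wealth is many times larger, and it jumps around when you change the seed.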

The empirical rule for normal distributions is that:

- 68% of the population lies between -1 and +1 standard deviations from the mean

- 95% of the population lies between -2 and +2 standard deviations from the mean

- 99.7% of the population lies between -3 and +3 standard deviations from the mean
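These percentages follow from the normal distribution itself: the probability of lying within k standard deviations of the mean is erf(k/√2). A minimal Python check (the function name is our own):

```python
import math

def within_k_sd(k: float) -> float:
    """Probability that a normally distributed value lies within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    print(f"within ±{k} SD: {within_k_sd(k):.1%}")
```

Running it prints roughly 68.3%, 95.4% and 99.7%, matching the rounded figures above.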

Power Law, Fractal, Fat-Tail distribution:

“Dramatic, large-scale events are [always] far less frequent than small ones. In systems characterized by power-law behaviour, however, they can occur at any time and for no particular reason. Sometimes, a very small event can have profound consequences, and occasionally a big shock can be contained and be of little import. It is not the power law itself which gives rise to these unexpected features of causality; rather it is the fact that we observe a power law in a system which tells us that causality within it behaves in this way. The conventional way of thinking, which postulates a link between the size of an event and its consequences, is broken.” (p. 170, Ormerod)

Problem of Induction:

“How can we logically go from specific instances to reach general conclusions?” (p. 40, Taleb)

This is not a ‘chicken and egg’ problem, it is a ‘chicken and death’ problem. Every day of a chicken’s life it gathers more evidence that humans, because they feed, house and protect it, are benign and look out for its best interests, until the day its neck gets wrung.
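One way to formalise the chicken’s growing confidence (our choice of model, not Taleb’s) is Laplace’s rule of succession, which estimates the chance of being fed tomorrow as (days fed + 1) / (days observed + 2). The sketch below, with a hypothetical function name, shows the estimate climbing towards certainty; nothing in the data can warn of the one day that matters.

```python
from fractions import Fraction

def naive_confidence(days_fed: int, days_observed: int) -> Fraction:
    """Laplace's rule of succession: estimated chance of being fed tomorrow,
    given days_fed successes out of days_observed days."""
    return Fraction(days_fed + 1, days_observed + 2)

# The chicken is fed every single day it observes.
for day in (1, 10, 100, 1000):
    print(day, float(naive_confidence(day, day)))
```

After 1,000 uneventful days the estimate is 1001/1002, higher than it has ever been, on the eve of the event that ends the series.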

Circularity of statistics:

“We need data to discover a probability distribution. How do we know if we have enough? From the probability distribution. If it is Gaussian, then a few points of data will suffice. How do we know it is Gaussian? From the data. So we need data to tell us what probability distribution to assume, and we need a probability distribution to tell us how much data we need. This causes a severe regress argument, which is somewhat shamelessly circumvented by resorting to the Gaussian and its kin.” (p. 310, Taleb)

Non-repeatability:

Black Swan events are always unique and therefore not subject to testing in repeatable experiments.

Complex systems:

LEVEL II

How we get fooled by uncertainty, unpredictability and randomness

Black Swan blindness:

“The underestimation of the role of the Black Swan, and occasional overestimation of a specific one.” (p. 307, Taleb).

This means that the effects of extreme events are even greater, because we treat them as less likely than they actually are and so are unprepared for them. As an example, we do not distinguish between ‘risk’, which, technically, can be calculated, and ‘uncertainty’, which cannot. Taleb maintains that the ‘high’, ‘medium’ and ‘low’ risk options offered to you by your financial adviser are a fiction because they are all based on ‘normal’ distributions that ignore the effect of Black Swans.
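To see why a bell-curve risk model is blind to events like the 1987 crash mentioned earlier, here is a back-of-the-envelope Python sketch. It assumes, purely for illustration, that daily market returns are normally distributed with a standard deviation of 1%; under that assumption a single-day fall of 20% is a 20-standard-deviation event. The function name is hypothetical.

```python
import math

def gaussian_prob_of_drop(drop: float, daily_sd: float) -> float:
    """P(one-day return <= -drop) if daily returns were Normal(0, daily_sd)."""
    z = drop / daily_sd
    return 0.5 * math.erfc(z / math.sqrt(2))

# Assumption for illustration only: a 'normal' daily standard deviation of 1%.
p = gaussian_prob_of_drop(drop=0.20, daily_sd=0.01)
print(f"20% fall in one day = 20-sigma event, probability about {p:.1e}")
```

The resulting probability is around 2.8e-89, so the model effectively says such a day should never happen; yet the Dow fell by over 20 percent in a single day in 1987.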

Confirmation bias:

“You look for instances that confirm your beliefs, your construction (or model) — and find them.” (p. 308, Taleb)

Part of the bias comes from our overvaluing what we find that confirms our model and undervaluing or ignoring anything that challenges our model.

Fallacy of silent evidence:

“Looking at history, we do not see the full story, only the rosier parts of the process.” (p. 308, Taleb) Ignoring silent evidence is complementary to the Confirmation Bias. “Our perceptual system may not react to what does not lie in front of our eyes. The unconscious part of our inferential mechanism will ignore the cemetery, even if we are intellectually aware of the need to take it into account. Out of sight, out of mind: we harbor a natural, even physical, scorn of the abstract.” [and randomness and uncertainty certainly are abstractions] (p. 121, Taleb).

By “the cemetery” Taleb means: the losers of wars don’t get to write history; only survivors tell their story; prevented problems are ignored; only fossils that are found contribute to theories of evolution; undiscovered places do not get onto the map; unread books do not form part of our knowledge.

Apparently 80 per cent of epidemiological studies fail to replicate, but most of these failures don’t get published, so we think causal relationships are more common than they are.
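The mechanism behind this can be sketched in a few lines of Python. The numbers are hypothetical and do not reproduce the 80 per cent figure: we assume 1,000 studies of effects that do not exist, treat each study’s test statistic as standard normal, and let only ‘significant’ results (|z| > 1.96) be published.

```python
import random

random.seed(1)
N_STUDIES = 1_000     # hypothetical studies of effects that do not exist
CRITICAL_Z = 1.96     # conventional two-sided 5% significance threshold

# Under the null hypothesis each study's test statistic is standard normal.
z_scores = [random.gauss(0, 1) for _ in range(N_STUDIES)]

# Publication filter: only "findings" get printed; the rest become silent evidence.
published = [z for z in z_scores if abs(z) > CRITICAL_Z]

hit_rate_all = len(published) / N_STUDIES
print(f"Studies run: {N_STUDIES}; 'significant' by chance: {hit_rate_all:.1%}")
print(f"Published record: {len(published)} papers, every one reporting an effect")
```

Roughly 5 per cent of the studies clear the bar by chance alone, yet the published record consists entirely of positive findings; the failures are the cemetery.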

Fooled by Randomness:

“The general confusion between luck and determinism, which leads to a variety of superstitions with practical consequences, such as the belief that higher earnings in some professions are generated by skills when there is a significant component of luck in them.” (p. 308, Taleb)

We have a hard time seeing animals as randomly selected, but even when we can accept that, we are even less likely to accept that the design of a car or a computer could be the product of a random process. Also, with so many people in the financial markets, there will be lots of “spurious winners.” Trying to become a winner in the stock market is hard, because even if you are smart, you are competing with all the spurious winners.
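The arithmetic of ‘spurious winners’ is easy to check. The sketch below assumes (hypothetically) 10,000 fund managers with no skill at all, each with a 50/50 chance of beating the market in any year, independently of other years.

```python
import random

random.seed(7)
MANAGERS = 10_000   # hypothetical fund managers with zero skill
YEARS = 10

def lucky_streaks(managers: int, years: int) -> int:
    """Count managers who beat the market every year by pure coin-flipping."""
    return sum(
        all(random.random() < 0.5 for _ in range(years))
        for _ in range(managers)
    )

expected = MANAGERS * 0.5 ** YEARS
print(f"expected perfect records: {expected:.1f}; simulated: {lucky_streaks(MANAGERS, YEARS)}")
```

About ten of them (10,000 × 0.5^10 ≈ 9.8) would be expected to post a perfect ten-year record by luck alone, and in hindsight each would have a convincing story about their skill.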

Future Blindness:

“Our natural inability to take into account the properties of the future” (p. 308, Taleb)

Our inability to conceive of a future that contains events that have never happened in the past — just think how difficult it can be to help some people associate into a desired outcome of their own choosing!

We can use Robert Dilts’ Jungle Gym model to postulate that Future Blindness is equivalent to Perceptual Position Blindness (e.g. the inability to take into account the mind of someone else — one of the features of autism) and Logical Level Blindness — the inability to notice the qualitative differences occurring at different levels (e.g. using sub-atomic theory to ‘explain’ human behaviour). Gregory Bateson frequently griped about Logical Level Blindness, and it is a characteristic of people Ken Wilber calls “flatlanders”.

Lottery-ticket fallacy:

“The naive analogy equating an investment in collecting positive Black Swans to the accumulation of lottery tickets.” (p. 308, Taleb)

The probability of winning the lottery is known precisely and is therefore not a Black Swan — just unlikely.
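For example, assuming a draw in which you pick 6 numbers from 49 (a common lottery format, used here only as an illustration), the odds can be computed exactly:

```python
import math

# Assumed format: choose 6 numbers from 49; order does not matter.
combinations = math.comb(49, 6)
print(f"Jackpot odds: 1 in {combinations:,}")
```

It prints 1 in 13,983,816: tiny, but precisely known. A Black Swan offers no such number.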

Locke’s madman:

“Someone who makes impeccable and rigorous reasoning from faulty premises thus producing phony models of uncertainty that make us vulnerable to Black Swans.” (p. 308, Taleb).

As examples, both Taleb and Ormerod pick out a couple of Nobel prize-winning economists (Robert Merton Jr. and Myron Scholes) who did much to promote Modern Portfolio Theory. In the summer of 1998, as a result of having applied their theories, their company, LTCM, could not cope with the Black Swan of the Russian financial crisis and “one of the largest trading losses ever in history took place in almost the blink of an eye … LTCM went bust and almost took down the entire financial system with it.” (p. 44 & p. 282, Taleb) However, that’s not the real story. The more important question is: why, ten years after the failure of LTCM, is Modern Portfolio Theory still the dominant model taught at universities and business schools?

Ludic fallacy:

Using “the narrow world of games and dice” to predict real life. In real life “randomness has an additional layer of uncertainty concerning the rules of the game.” (p. 309, Taleb).

Narrative fallacy:

“Our need to fit a story or pattern to a series of connected or disconnected facts.” (p. 309, Taleb)

i.e. to explain past events. Note that the plausibility of the story is not a factor. The fallacy is forgetting that any narrative is only one of many possible narratives that could be made to fit ‘the facts’, and therefore believing we understand ‘the cause’.

Nonlinearity:

“Our emotional apparatus is designed for linear causality. With linearities, relationships between variables are clear, crisp, and constant, therefore Platonically easy to grasp in a single sentence. In a primitive environment, the relevant is the sensational. This applies to our knowledge. When we try to collect information about the world around us, we tend to be guided by our biology, and our attention flows effortlessly toward the sensational, not the relevant. Our intuitions are not cut out for nonlinearities. Nonlinear relationships can vary; they cannot be expressed verbally in a way that does justice to them. These nonlinear relationships are ubiquitous in [modern] life. Linear relationships are truly the exception; we only focus on them in classrooms and textbooks because they are easier to understand.” (extracts from pp. 87-89, Taleb)

Platonic fallacy:

Has several manifestations:

- “the focus on those pure, well-defined, and easily discernible objects like triangles” and believing those models apply to “more social notions like friendship or love”;

- focusing on categories “like friendship and love, at the cost of ignoring those objects of seemingly messier and less tractable structures”; and

- the belief that “what cannot be Platonized [put into well-defined ‘forms’] and studied does not exist at all, or is not worth considering.” (p. 309, Taleb)

Good examples are the importance we attach to numbers (did you know that 76.27% of all statistics are made up?), and how much better we feel when we have a label for an illness.

Retrospective distortion (Hindsight bias):

“Examining past events without adjusting for the forward passage of time leads to the illusion of posterior predictability.” (p. 310, Taleb)

Commonly known as ‘hindsight’: we think we could have predicted events, if only we had known then what we know now. Most of what people look for, they do not find; most of what they find, they did not look for. But hindsight bias makes discoveries and inventions appear more planned and systematic than they really are. As a result we underestimate serendipity and the accidental.

Reverse-engineering problem:

“It is easier to predict how an ice cube would melt into a puddle than, looking at a puddle, to guess the shape of the ice cube that may have caused it. This ‘inverse problem’ makes narrative disciplines and accounts (such as histories) suspicious.” (p. 310, Taleb)

Round-trip fallacy:

“The confusion of absence of evidence of Black Swans (or something else) for evidence of absence of Black Swans (or something else).” (p. 310, Taleb)

Just because you have never seen a Black Swan doesn’t mean they don’t exist.
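A little arithmetic shows how weak ‘I have never seen one’ is as evidence. Under hypothetical numbers (1 swan in 100,000 is black, you have seen 10,000 swans, and each sighting is independent), the chance of having seen only white swans is (1 − p)^n. The function name is our own.

```python
def prob_no_sighting(fraction_black: float, swans_seen: int) -> float:
    """Chance of seeing only white swans if a given fraction of all swans are black."""
    return (1 - fraction_black) ** swans_seen

# Hypothetical numbers: 1 swan in 100,000 is black, and you have seen 10,000 swans.
print(f"{prob_no_sighting(1e-5, 10_000):.1%}")
```

That comes to about 90 per cent, so a spotless record of white swans is entirely compatible with black swans existing.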

Toxic knowledge:

“Additional knowledge of the minutiae of daily business can be useless, even actually toxic. The more information you give someone, the more hypotheses they will formulate along the way, and the worse off they will be. They see more random noise and mistake it for information. The problem is that our ideas are sticky: once we produce a theory, we are not likely to change our minds, so those who delay developing their theories are better off. When you develop your opinions on the basis of weak evidence, you will have difficulty interpreting subsequent information that contradicts these opinions, even if this new information is obviously more accurate. Two mechanisms are at play here: Confirmation bias and belief perseverance. Remember, we treat ideas like possessions, and it will be hard for us to part with them.” (p. 144, Taleb)

My summary of Level II - How we get fooled:

| UNDERESTIMATE | OVERESTIMATE |
|---|---|
| Unknown | Known |
| Uncertain | Certain |
| Unpredictable | Predictable |
| Uncontrollable | Controllable |
| Unplannable | Planning & preparedness |
| Random | Causal |
| Ignorance | Expertise |
| Effect of a single extreme | Rate of incremental change |
| Nonlinearities | Linearities |
| Power Laws, Fractals, Fat Tails | Averages & Bell Curves |
| Forward march of time | Backward view of history |
| Relevant | Sensational |
| Luck | Skill |
| The ‘impossible’ | The ‘possible’ |
| Commitment to our own models | Ability to be objective |
| Subjectivity | Objectivity |
| Messy reality | Neat constructs |
| Illogical evidence | Logical reasoning |
| Complexity | Simplicity |
| The idiosyncratic | The norm |
| Empirical evidence | Relevance of stories |
| Failure | Success |

LEVEL III

Why we don’t seem to learn that we are fooled by uncertainty, unpredictability and randomness

Black Swan ethical problem:

“There is an asymmetry between the rewards of those who prevent and those who cure.” (p. 308, Taleb)

The former are ignored; the latter highly decorated. Would we have rewarded the person who made aircraft manufacturers fit security locks to cockpit doors before 9/11? Hence it pays to be a problem solver. Similarly we pay forecasters who use complicated mathematics much more than forecasters who say “We don’t know”. Therefore it pays to forecast. Both these asymmetries increase the likelihood of negative Black Swans.

Expert problem:

“Some professionals have no differential abilities from the rest of the population, but against their empirical records, are believed to be experts: clinical psychologists, academic economists, risk ‘experts’, statisticians, political analysts, financial ‘experts’, military analysts, CEOs etc. They dress up their expertise in beautiful language, jargon, mathematics, and often wear expensive suits.” (p. 308, Taleb)

Scandal of prediction:

“The lack of awareness of their own poor predicting record in some forecasting entities (particularly the narrative disciplines).” (p. 310, Taleb) “What matters is not how often you are right, but how large your cumulative errors are. And these cumulative errors depend largely on the big surprises, the big opportunities.” (p. 149, Taleb)
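Taleb’s remark that cumulative errors matter more than hit rate can be illustrated with two hypothetical track records, where each entry is the gain or loss produced by one forecast:

```python
# Hypothetical track records: one entry per forecast, value = gain or loss it produced.
frequently_right = [1] * 99 + [-200]     # right 99 times in 100, then one big miss
rarely_right = [-1] * 90 + [20] * 10     # wrong 90 times in 100, but big when right

def hit_rate(record: list[int]) -> float:
    """Fraction of forecasts that produced a gain."""
    return sum(x > 0 for x in record) / len(record)

for name, record in [("frequently right", frequently_right), ("rarely right", rarely_right)]:
    print(f"{name}: hit rate {hit_rate(record):.0%}, cumulative result {sum(record):+d}")
```

The forecaster who is right 99 per cent of the time ends up 101 units down because of a single large miss, while the one who is right only 10 per cent of the time ends up 110 units ahead.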

Common ways we blame others for our poor predictive abilities:

- I was just doing what everyone else does. (The “herd”, or maybe the “nerd”, effect.)

- I was almost right.

- I was playing a different game.

- No one could have forecast that.

- Other than that, it was okay.

- They didn’t do what they should have.

- If only I’d known X.

- But the figures said …

Epistemic arrogance:

Arrogance is the result of

“A double effect: we overestimate what we know, and we underestimate uncertainty, by compressing the range of possible uncertain states (i.e. by reducing [in our mind] the space of the unknown).” (p. 140, Taleb)

And I would add to Taleb’s list:

Comfort of certainty:

We’d rather have a story that makes us comfortable than face the discomfort of uncertainty.

Uncertainty quickly becomes existential:

I have observed that, generally, when we consider:

- what we know in relation to what we don’t know

- our ability to predict in the face of what’s unpredictable

- how much we can control as opposed to what’s uncontrollable

- how much effect we can have compared to a Black Swan event

we are faced with the nature of our individual existence in relation to the Cosmos … Oh my God!

LEVEL IV

How we can learn from how we are fooled by uncertainty, unpredictability and randomness

Maximize serendipity:

“A strategy of seeking gains by collecting positive accidents from maximising exposure to ‘good Black Swans’.” (p. 307, Taleb)

Taleb calls this an “Apelles-style strategy”. Apelles the Painter was a Greek who, try as he might, could not depict the foam from a horse’s mouth. In irritation he gave up and threw the sponge he used to clean his brush at the picture. Where the sponge hit, it left a beautiful representation of foam.

Despite the billions of dollars spent on cancer research, the single most valuable cancer drug discovered to date, chemotherapy, was a by-product of mustard gas used in the First World War.

Taleb recommends living in a city and going to parties to increase the possibility of serendipity. [Other examples: speed dating, holding liquidity, writing articles, newspapers, sowing seeds]
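The logic of maximising exposure can be put in numbers. Assuming, hypothetically, that each exposure (a party, an article, a small bet) independently has a 1 per cent chance of a lucky break, the chance of at least one break after n exposures is 1 − (1 − p)^n. The function name is our own.

```python
def chance_of_at_least_one(p_per_exposure: float, exposures: int) -> float:
    """Probability that at least one of several independent exposures pays off."""
    return 1 - (1 - p_per_exposure) ** exposures

# Hypothetical: each exposure has a 1% chance of a lucky break.
for n in (1, 10, 50, 200):
    print(f"{n:>3} exposures: {chance_of_at_least_one(0.01, n):.1%}")
```

Under these assumptions the chance rises from 1 per cent with a single exposure to almost 40 per cent with 50 and about 87 per cent with 200.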

Use a barbell strategy:

“A method that consists of taking both a defensive attitude and an excessively aggressive one at the same time, by protecting assets from all sources of uncertainty while allocating a small portion for high-risk strategies.” (p. 307, Taleb)

“What you should avoid is unnecessary dependence on large-scale predictions — those and only those. Avoid the big subjects that may hurt your future: be fooled in small matters, not large. Know how to rank beliefs not according to their plausibility but by the harm they may cause. Knowing that you cannot predict does not mean that you cannot benefit from unpredictability. The bottom line: be prepared! Narrow-minded prediction has an analgesic effect. Be aware of the numbing effect of magic numbers. Be prepared for all relevant eventualities.” (p. 203, Taleb)

Taleb suggests that rather than putting your money in ‘medium risk’ investments (see Black Swan Blindness) “you need to put a portion, say 85-90 percent, in extremely safe instruments, like Treasury Bills. The remaining 10-15 percent you put in extremely speculative bets, preferably venture capital-style portfolios. (Have as many of these small bets as you can conceivably have; avoid being blinded by the vividness of one single Black Swan.)” (p. 205, Taleb)

Taleb lists a number of “modest tricks”, asking us to “note the more modest they are the more effective they will be”:

a. Make a distinction between positive and negative contingencies.

b. Don’t look for the precise and the local — invest in preparedness, not prediction.

c. Seize any opportunity or anything that looks like an opportunity.

d. Beware of precise plans by governments.

e. Don’t waste your time trying to fight forecasters, economists etc. (pp. 206-210, Taleb)

Accept random causes:

Avoid the silent evidence fallacy: “Whenever your survival is in play, don’t immediately look for causes and effects. The main reason for our survival might simply be inaccessible to us. We think it is smarter to say ‘because’ than to accept randomness.” (p. 120, Taleb)

By “survival” Taleb means not only your physical survival from, say, disease, but also survival of your company, survival as an employee, survival of your marriage, survival of your success or status, etc.

De-narrate:

“Shut down the television set, minimize time spent reading newspapers, ignore blogs” because the more news you listen to and newspapers you read, the more your view will converge on the general view — and the greater the tendency to underestimate extreme events.

Account for emotions:

“Train your reasoning abilities to control your decisions. Train yourself to spot the difference between the sensational and the empirical.” (p. 133, Taleb) “Most of our mistakes in reasoning come from using [intuition] when we are in fact thinking that we are using [the cogitative]” (p. 82, Taleb).

Taleb’s point is that our emotional responses will unconsciously dominate unless we take them into account when we make decisions in the nonlinear world of Extremistan.

Avoid tunneling:

“The neglect of sources of uncertainty outside the plan itself.” (p. 156, Taleb)

Question the error rate:

“No matter what anyone tells you, it is a good idea to question the error rate of an expert’s procedure. Do not question his procedure, only his confidence. A hernia surgeon will rarely know less about hernias than [you]. But their probabilities, on the other hand, will be off — and this is the disturbing point, you may know much more on that score than the expert.” (p. 145, Taleb)

Recognize self-delusion:

“You cannot ignore self-delusion. The problem with experts is that they do not know what they do not know. Lack of knowledge and delusion about the quality of your knowledge come together — the same process that makes you know less also makes you satisfied with your knowledge.” (p. 147, Taleb)

Also see an article by Penny Tompkins and myself, ‘Self-deception, delusion and denial’.

Use stochastic tinkering:

Use a lot of trial and error. To do that you have to learn to love to lose, to be wrong, to be mistaken — and keep trialling.

Personally, I prefer the term trial-and-feedback because it presupposes the noticing of ‘errors’ and acting on that awareness. Wikipedia is a living example of stochastic tinkering.

Focus on consequences:

“The idea that in order to make a decision you need to focus on the consequences (which you can know) rather than the probability (which you can’t know) is the central idea of uncertainty. Much of my life is based on it.” (p. 211, Taleb)

Foster cognitive diversity:

“The variability in views and methods acts like an engine for tinkering. It works like evolution. By subverting the big structures we also get rid of Platonified one way of doing things — in the end, the bottom-up theory-free empiricist should prevail.” (pp. 224-5, Taleb)

My conclusion?

David Grove was himself a Black Swan …

Further Reading

There is a summary of the book at: en.wikipedia.org/.

If you prefer to listen rather than read, there is a 1 hour 23 minute podcast at:

www.econtalk.org/archives/2007/04/taleb_on_black.html

Nassim Nicholas Taleb’s home page, www.fooledbyrandomness.com, has links to many articles and reviews.

For an article on unexpected creativity in businesses, see:

‘Corporate Creativity: It’s Not What You Expect’, Alan G. Robinson and Sam Stern. Innovative Leader, Volume 6, Number 10, October 1997.

Other books that have contributed to my knowledge of this subject (note: I am not listing all the books on the subject that I haven’t read!):

DIRECTLY RELATED

Philip Ball, Critical Mass: How one thing leads to another (2005).

Mark Buchanan, Ubiquity: Why catastrophes happen (2002).

James Gleick, Chaos: The amazing science of the unpredictable (1998).

Paul Ormerod, Why Most Things Fail … and how to avoid it (2006).

Nassim Nicholas Taleb, Fooled by Randomness: The hidden role of chance in the markets and life (2001).

Mark Ward, Universality: The underlying theory behind life, the universe and everything (2002).

CLOSELY RELATED

Albert-Laszlo Barabasi, Linked: How everything is connected (2003).

Mark Buchanan, Nexus: Small worlds and the science of networks (2002).

Fritjof Capra, The Web of Life: A new synthesis of mind and matter (1996).

Fritjof Capra, Hidden Connections: Integrating the biological, cognitive and social (2002).

Jack Cohen & Ian Stewart, The Collapse of Chaos: Discovering simplicity in a complex world (1995).

Murray Gell-Mann, The Quark and the Jaguar: Adventures in the simple and the complex (1995).

Malcolm Gladwell, The Tipping Point: How little things can make a big difference (2002).

Neil Johnson, Two’s Company, Three’s Complexity (2007).

Bart Kosko, Fuzzy Thinking: The new science of fuzzy logic (1994).

Roger Lewin, Complexity: Life at the edge of chaos (1993).

Steven Johnson, Emergence: The connected lives of ants, brains, cities and software (2001).

Steven Strogatz, Sync: Rhythms of nature, rhythms of ourselves (2003).

Duncan Watts, Six Degrees: The science of a connected age (2003).

Stephen Wolfram, A New Kind of Science (2002).

The next Developing Group will continue to focus on Level IV, Maximising Serendipity: The art of recognising and fostering potential.